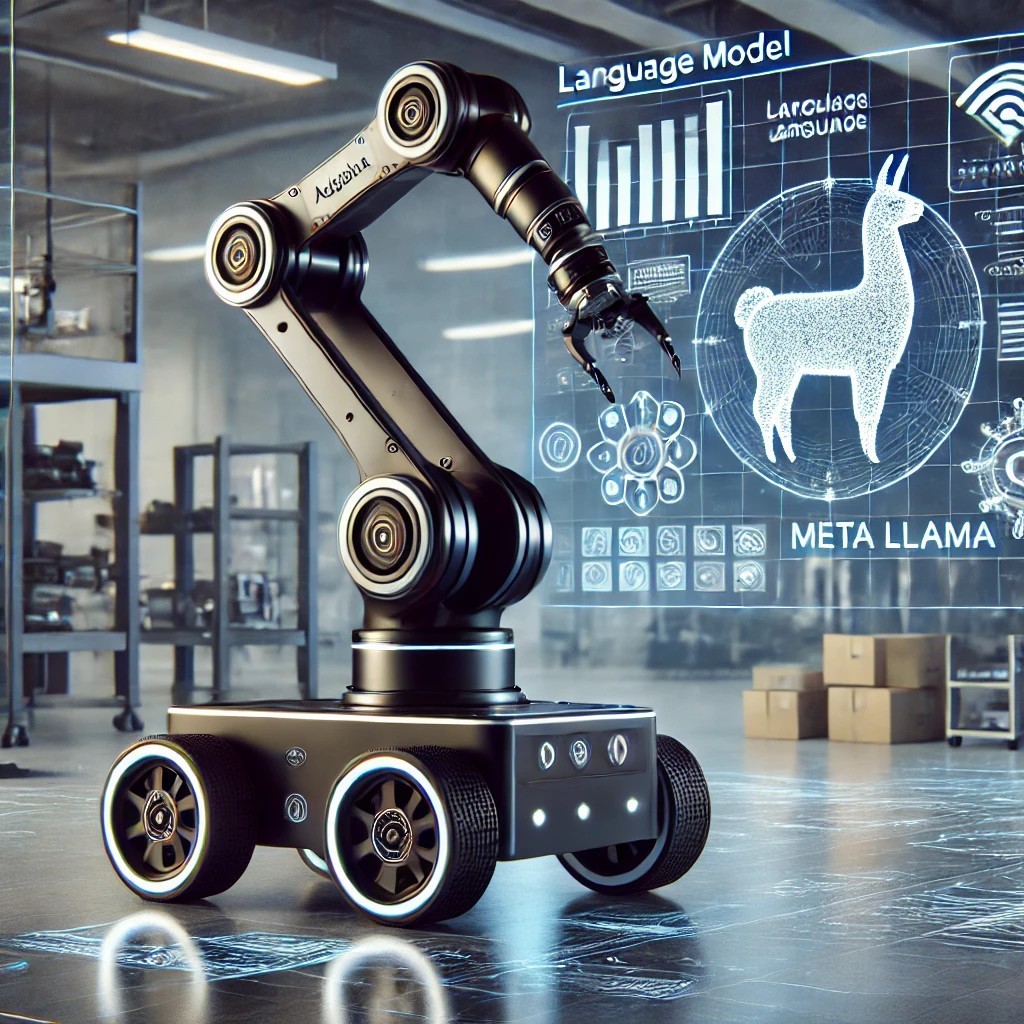

In the laboratory for cyber-physical systems at TH Köln (Gummersbach campus), there is a mobile robot with a gripper (https://global.agilex.ai/products/limo-corot) that can be programmed in Python to move around, pick up various objects and place them elsewhere.

A Large Language Model (LLM) is to be integrated so that the robot can be controlled by text and/or voice using simple commands such as “Find all the pens in this room and place them in the middle of the room”. In other words, the LLM must interpret the text command together with images from a camera mounted on the robot and output code that controls the robot.
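One possible way to keep LLM output safe to run on a real robot is to have the model emit a structured action list instead of free-form code, and to dispatch only whitelisted actions. The following is a minimal sketch of that idea under stated assumptions; it is not part of RAI, ROSA or the robot's actual Python API. `MockRobot`, its methods `move_to`, `pick` and `place`, and the JSON plan format are all hypothetical placeholders.

```python
import json
from dataclasses import dataclass, field


@dataclass
class MockRobot:
    """Hypothetical stand-in for the real robot interface; it only records calls."""
    log: list = field(default_factory=list)

    def move_to(self, x: float, y: float) -> None:
        self.log.append(("move_to", x, y))

    def pick(self, obj: str) -> None:
        self.log.append(("pick", obj))

    def place(self) -> None:
        self.log.append(("place",))


# Whitelist: the only robot actions the LLM output is allowed to trigger.
ALLOWED_ACTIONS = {"move_to", "pick", "place"}


def execute_plan(robot: MockRobot, llm_output: str) -> int:
    """Parse a JSON action list (hypothetical format) and dispatch each step.

    Returns the number of executed steps; raises ValueError on a non-whitelisted action.
    """
    steps = json.loads(llm_output)
    for step in steps:
        args = dict(step)              # copy so the parsed plan is not mutated
        name = args.pop("action")
        if name not in ALLOWED_ACTIONS:
            raise ValueError(f"action not allowed: {name}")
        getattr(robot, name)(**args)   # remaining keys become keyword arguments
    return len(steps)


if __name__ == "__main__":
    # Example plan an LLM might produce for "bring the pen to the middle of the room".
    plan = (
        '[{"action": "move_to", "x": 1.0, "y": 2.0},'
        ' {"action": "pick", "obj": "pen"},'
        ' {"action": "move_to", "x": 0.0, "y": 0.0},'
        ' {"action": "place"}]'
    )
    robot = MockRobot()
    print(execute_plan(robot, plan))
    print(robot.log)
```

With the example plan, `execute_plan` dispatches four steps in order, while a plan containing an action outside `ALLOWED_ACTIONS` raises `ValueError` instead of reaching the robot. Swapping `MockRobot` for a wrapper around the real robot interface would leave the dispatch logic unchanged.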

First, a literature search should be carried out: several research papers in this area already exist, and some of them provide open-source implementations and libraries on GitHub, for example RAI or ROSA.

Your task is to familiarise yourself with at least one of these libraries or other projects and test whether it can be used for the given application. Once you have found a suitable candidate, you will apply it to the real robot. Finally, you will test your application with commands such as the one given in the problem description. The programming language should be Python throughout. The LLM and other algorithms for object detection, robot localisation, … should run locally on a lab computer with a powerful GPU; they do not need to run on the robot itself. The LLM and other deep learning models (e.g. for object detection or speech-to-text) do not need to be trained and can be used off-the-shelf. Depending on the size of the project and the number of students, it is also possible to develop a user interface, add voice control, and compare several LLMs in terms of performance versus latency.

The size of the project (6 CP or 12 CP) can be agreed with the students at the beginning of the work. The project can build on the results of previous guided projects and other work in the lab on similar topics.

In this project you will:

- conduct a literature review on a hot topic in robotics and deep learning;
- become familiar with a potentially large Python project and adapt it for integration with real hardware;
- integrate several deep learning models and other advanced robotics algorithms into a real application;
- work with real hardware: robot, gripper, camera and other sensors;
- apply prompt engineering to an LLM.

Python, Deep Learning, (Computer Vision, robotics)
